Clippy

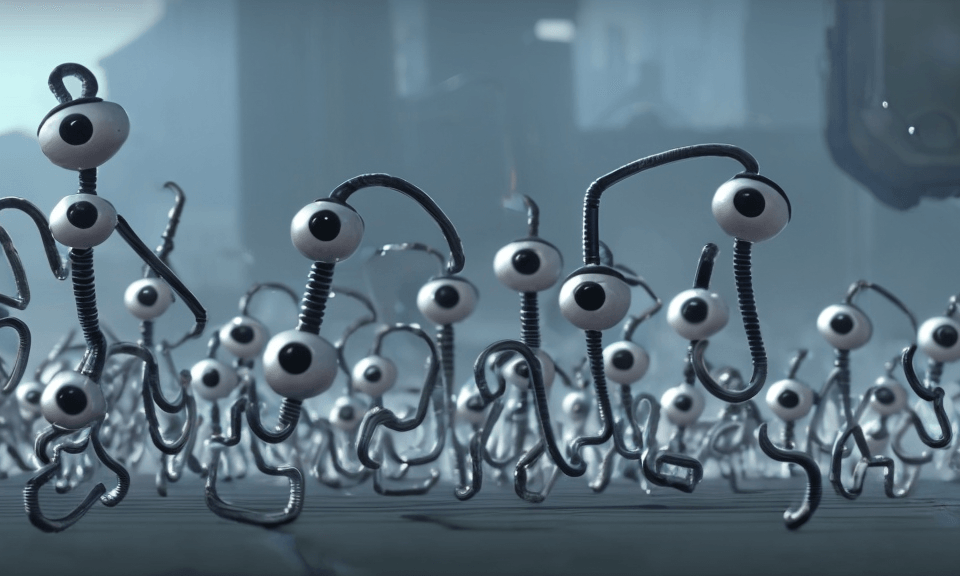

Every semester, the Media Lab at MIT throws a 99Fridays party, a celebration of self-expression and creative energy. The theme for the party in April 2023 was "Conquered By Clippy": an imagined world where Clippy becomes superintelligent and takes over. For my contribution, I used Dreambooth to fine-tune Stable Diffusion on images of Clippy, and generated video content of the Clippy army that conquered Earth.

Dreambooth

Dreambooth is a fine-tuning framework that lets you inject a new object tag into Stable Diffusion. It is commonly used to train the model on a specific human face (which I did here) so that it can generate new images of that person, but it can also teach the model other subjects. Out of the box, Stable Diffusion could not draw Clippy: prompting "Clippy" generated nonsense, and prompting "Clippy, which is a cartoon paperclip" generated discombobulated eyes with wires attached. So, in order to generate new images of Clippy, I first needed to train a new model.

Training

To train a Dreambooth model, one first needs to gather ~20-30 images of the target object. I was able to find a few images on Google, but most of the clip art of Clippy is either very low-res or a copy of the same image, so I gathered images by grabbing frames from YouTube videos of Clippy instead. The videos were pretty low-resolution, unfortunately, so the images were as well. However, I hoped that the diffusion model would be able to work past the low resolution. Here are some examples of the images I used for the first attempt:
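As a rough illustration of the frame-grabbing step, here is a minimal sketch that plans evenly spaced frame grabs from a video and builds the matching ffmpeg commands. This is an assumption about tooling, not a record of what I actually ran: the function names, the output naming scheme, and the choice of ffmpeg are all hypothetical, and the clip duration has to be supplied by hand.

```python
from pathlib import Path


def sample_timestamps(duration_s: float, count: int) -> list[float]:
    """Return `count` timestamps spread evenly across a clip.

    The first and last instants are skipped, since they are often
    title cards or fades rather than usable frames.
    """
    if count <= 0 or duration_s <= 0:
        return []
    step = duration_s / (count + 1)
    return [round(step * (i + 1), 3) for i in range(count)]


def ffmpeg_grab_command(video: str, timestamp_s: float, out_path: str) -> list[str]:
    """Build an ffmpeg command that saves one frame at `timestamp_s`."""
    return [
        "ffmpeg",
        "-ss", str(timestamp_s),  # seek to the timestamp
        "-i", video,
        "-frames:v", "1",         # write exactly one video frame
        out_path,
    ]


def plan_frame_grabs(video: str, duration_s: float, count: int, out_dir: str) -> list[list[str]]:
    """Return one ffmpeg command per sampled timestamp (nothing is executed)."""
    stem = Path(video).stem
    return [
        ffmpeg_grab_command(video, t, str(Path(out_dir) / f"{stem}_{i:03d}.png"))
        for i, t in enumerate(sample_timestamps(duration_s, count))
    ]
```

Each returned command could be passed to `subprocess.run(cmd, check=True)`. Evenly spaced sampling is only a starting point; near-duplicate frames would still need to be culled by eye.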

Results

For the first attempt, I used images of Clippy in all his configurations (turning into a bicycle, etc.). While the generated images had some Clippy-like elements (bent silver wires, googly eyes, sheets of yellow paper), they weren't visually recognizable as Clippy.

Additionally, most of the images generated using this method had the original background of the input images. This makes sense: if we tell Dreambooth "this is Clippy, make more of these" while giving it a set of images that all share the same background, it is reasonable to expect that it will try to reproduce the given images, background included.

Training Round 2

To solve this issue, I trained a new model using images of Clippy only in the "paperclip" configuration, and photoshopped those images to have different backgrounds. I then labeled the images with captions, including not only the new Clippy tag, but also a description of the background. For example, one of the images was Clippy in front of Times Square, which I captioned "[Clippy tag] in Times Square". This helps the model distinguish the background from the target.
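The captioning scheme can be sketched in a few lines. This is a hedged illustration only: the tag string `sks_clippy`, the file names, and the sidecar `.txt` caption convention are assumptions made for the example, not the actual tag or trainer format I used.

```python
from pathlib import Path

# Hypothetical placeholder for the new Clippy tag; the real tag is not shown here.
INSTANCE_TAG = "sks_clippy"


def build_caption(background: str, tag: str = INSTANCE_TAG) -> str:
    """Combine the subject tag with a description of the background."""
    background = background.strip()
    return f"{tag} {background}" if background else tag


def write_caption_files(backgrounds: dict[str, str], image_dir: str) -> list[Path]:
    """Write one caption file next to each image (same name, .txt suffix).

    Assumes a trainer that reads sidecar caption files; adjust to whatever
    format your Dreambooth implementation expects.
    """
    written = []
    for image_name, background in backgrounds.items():
        caption_path = Path(image_dir) / Path(image_name).with_suffix(".txt")
        caption_path.write_text(build_caption(background) + "\n", encoding="utf-8")
        written.append(caption_path)
    return written
```

For example, `build_caption("in Times Square")` yields `"sks_clippy in Times Square"`. Because the background is named in the caption, the model has a textual handle for it that is separate from the Clippy tag.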

Results Round 2

I generated a few videos, which I passed on to the amazing VJ MrImprobable, who worked them into his set. As usual, my pipeline was to generate with Stable Diffusion at 1080p, interpolate one frame between each pair of generated frames with FILM to smooth things out, and then upscale to 4K with Real-ESRGAN.
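The bookkeeping for that pipeline is simple enough to sketch. The helper names and the 24 fps figure below are assumptions for illustration; the sketch only does the arithmetic and does not call FILM or Real-ESRGAN.

```python
def frames_after_interpolation(generated: int, inserted_per_gap: int = 1) -> int:
    """Frame count after inserting `inserted_per_gap` frames between each adjacent pair."""
    if generated < 2:
        return max(generated, 0)  # nothing to interpolate between
    return generated + (generated - 1) * inserted_per_gap


def upscaled_size(width: int, height: int, factor: int = 2) -> tuple[int, int]:
    """Output resolution after upscaling both dimensions by `factor`."""
    return width * factor, height * factor


def playback_seconds(frames: int, fps: float) -> float:
    """Clip length in seconds at a given playback frame rate."""
    return frames / fps


generated = 120                                  # frames produced by the diffusion model
smoothed = frames_after_interpolation(generated) # one new frame per gap
width, height = upscaled_size(1920, 1080)        # 1080p scaled 2x in each dimension
print(smoothed, width, height)                   # prints: 239 3840 2160
```

Inserting one frame per gap nearly doubles the frame count, so the same clip can either play at roughly twice the frame rate or last roughly twice as long, and a 2x upscale of 1080p lands exactly on 4K UHD (3840x2160).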